In the vast, ever-expanding digital landscape, every website serves as its own unique island, crafted with care and creativity. Yet, much like the unseen currents that guide ships, there are subtle forces at play that help direct the flow of online traffic. One such force is the humble but powerful robots.txt file, a tiny yet significant component of WordPress sites that communicates with search engine crawlers and bots. In this article, we will explore what it is, why it matters, and the essential directives that belong in your WordPress robots.txt file. Join us on this journey to uncover the best practices for optimizing your site’s visibility while keeping control over which parts of your site crawlers visit.

The robots.txt file acts as an interface between your website and web crawlers, mainly search engine bots. This plain text file is served from the root of your WordPress site and tells crawlers which parts of the site they are permitted to crawl. By managing what content is crawled and what is ignored, you help search engines spend their crawl effort efficiently, which supports your site’s SEO. A well-structured robots.txt file keeps crawlers out of areas such as the admin section and ensures that search engines focus on the most valuable pages of your website.

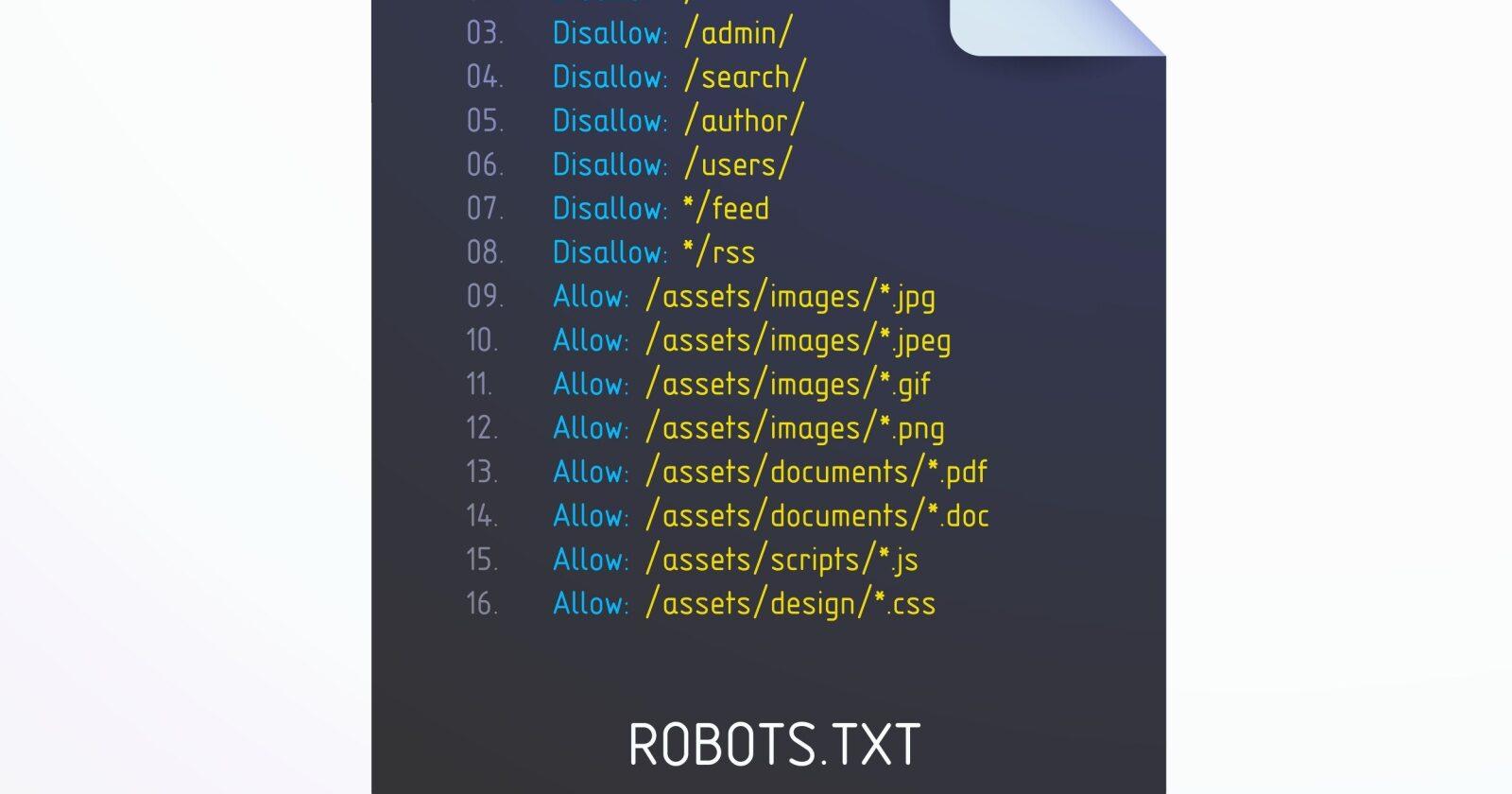

When configuring your robots.txt file in WordPress, you can include directives that reflect your site’s unique needs. Here’s a simple layout of the common elements your robots.txt file might contain:

| Directive | Description |
|---|---|
| User-agent: * | Applies to all search engines. |
| Disallow: /wp-admin/ | Blocks access to the admin area. |
| Allow: /wp-admin/admin-ajax.php | Allows AJAX functionality in the admin area. |
| Sitemap: https://yourwebsite.com/sitemap.xml | Points to your XML sitemap. |
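
As a rough sketch, the directives in the table can be assembled into a file and checked with Python’s standard `urllib.robotparser` module. The domain `yourwebsite.com` is the placeholder from the table, and the tested paths (`/wp-admin/options.php`, `/hello-world/`) are illustrative assumptions. The `Allow` line is listed first here because Python’s parser applies the first matching rule in order, whereas crawlers such as Google’s pick the most specific matching rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

# Directives from the table; yourwebsite.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Sitemap: https://yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Illustrative URLs: an admin page, the AJAX endpoint, and a public post.
admin_ok = parser.can_fetch("*", "https://yourwebsite.com/wp-admin/options.php")
ajax_ok = parser.can_fetch("*", "https://yourwebsite.com/wp-admin/admin-ajax.php")
post_ok = parser.can_fetch("*", "https://yourwebsite.com/hello-world/")
sitemaps = parser.site_maps()  # available in Python 3.8 and later

print(admin_ok, ajax_ok, post_ok)  # prints: False True True
print(sitemaps)
```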

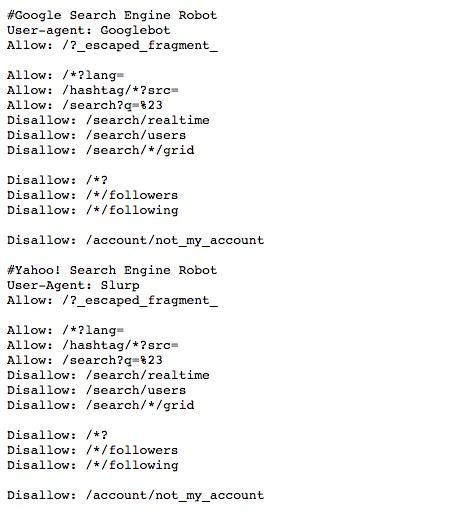

In crafting the perfect robots.txt file for your WordPress site, it’s crucial to implement rules that enhance your site’s SEO performance while protecting its integrity. Focus on allowing search engines to crawl essential pages while blocking access to sections that could dilute your site’s value. For instance, a rule such as Disallow: /wp-admin/ keeps crawlers out of the admin area.

Moreover, incorporating a Sitemap declaration in your robots.txt file helps search engines discover the pages you want crawled. Here’s a simple example to visualize:

| Directive | Description |
|---|---|
| User-agent: * | Targets all web crawlers. |
| Disallow: /wp-admin/ | Keeps crawlers out of the admin pages. |
| Sitemap: http://yourwebsite.com/sitemap.xml | Informs crawlers where to find your sitemap. |

When configuring your robots.txt, it’s easy to overlook critical elements that can impact your site’s SEO and usability. One common mistake is blocking essential resources. This includes assets like CSS and JavaScript files, which are crucial for rendering your site correctly. When these files are blocked, search engine crawlers may struggle to render and understand your content, which can hinder indexing and ultimately affect your visibility. Double-check the paths specified in your robots.txt file to ensure you’re not inadvertently limiting access to necessary elements.
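
One way to catch this is to test a few representative asset paths against your rules. The sketch below is only illustrative: the helper `blocked_assets`, the rule set that blocks `/wp-includes/`, and the asset paths are all assumptions, so substitute the files your theme actually loads.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt: str, asset_paths: List[str], agent: str = "*") -> List[str]:
    """Return the asset paths that the given rules stop `agent` from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in asset_paths if not parser.can_fetch(agent, path)]


# Hypothetical rules that block a directory holding scripts and styles.
rules = """\
User-agent: *
Disallow: /wp-includes/
"""

# Example asset paths (assumed for illustration).
assets = [
    "/wp-includes/js/jquery/jquery.min.js",
    "/wp-content/themes/example/style.css",
]

blocked = blocked_assets(rules, assets)
print(blocked)  # lists only the script under /wp-includes/
```

An empty result means none of the listed assets are blocked for that user agent.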

Another frequent error is using overly broad disallow rules. For instance, a simple directive like Disallow: / tells every crawler it applies to that your entire site is off limits. Instead, focus on more targeted approaches. Specify which directories or files should be disallowed while leaving the rest open for crawling. It’s also vital to regularly review your robots.txt file to ensure it aligns with your current content strategy and doesn’t include any outdated rules.
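
The difference between a broad rule and a targeted one is easy to see with Python’s `urllib.robotparser`. This is a minimal sketch: the helper `allowed` and the path `/blog/my-post/` are hypothetical names chosen for illustration.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, path: str, agent: str = "*") -> bool:
    """Report whether `agent` may fetch `path` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


TOO_BROAD = "User-agent: *\nDisallow: /\n"         # blocks the whole site
TARGETED = "User-agent: *\nDisallow: /wp-admin/\n"  # blocks only the admin area

# Print each path with its result under the broad and the targeted rule set.
for path in ("/", "/blog/my-post/", "/wp-admin/"):
    print(path, allowed(TOO_BROAD, path), allowed(TARGETED, path))
```

Under the broad rule every path comes back as not allowed, while the targeted rule blocks only `/wp-admin/`.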

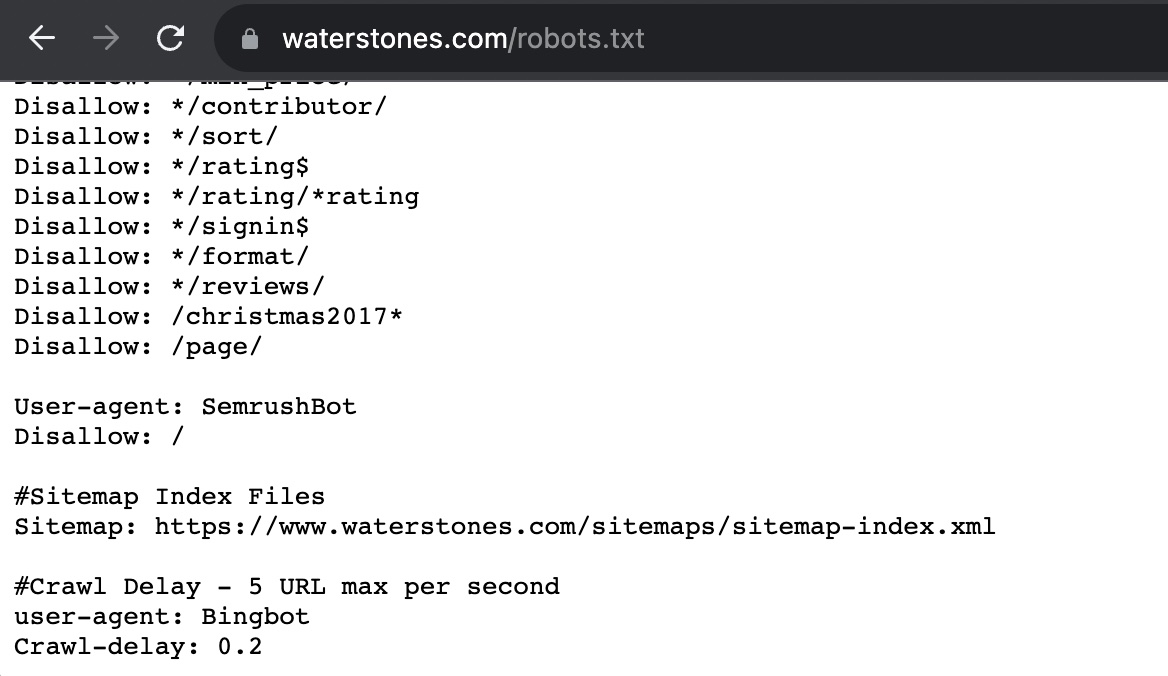

To ensure your robots.txt file is performing as intended, regular testing and monitoring are crucial. Use tools like Google Search Console to check how search engine crawlers interpret your directives. You can also manually inspect your file by entering your domain followed by /robots.txt in your browser to confirm that the content matches your expectations. Additionally, consider using online testing tools that simulate how different search engines will interact with your directives.
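
The manual check can also be scripted. The following sketch builds the robots.txt URL for a site and, when asked, downloads and parses the live file with `urllib.robotparser`. The function names `robots_url` and `load_live_rules` are hypothetical, and `yourwebsite.com` is a placeholder domain.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def robots_url(site: str) -> str:
    """Build the robots.txt URL at the root of the host in `site`."""
    parts = urlsplit(site)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def load_live_rules(site: str) -> RobotFileParser:
    """Download and parse the live robots.txt (performs a network request)."""
    parser = RobotFileParser()
    parser.set_url(robots_url(site))
    parser.read()
    return parser


print(robots_url("https://yourwebsite.com"))  # prints: https://yourwebsite.com/robots.txt

# To check a real site, call load_live_rules() with your own domain and then
# ask the returned parser, e.g. parser.can_fetch("*", "/some-page/").
```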

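When reviewing the effectiveness of your file, confirm that the pages you want crawled are reachable, that the paths you block are the ones you intended, and that resources such as CSS and JavaScript remain accessible.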

It’s also helpful to periodically update your robots.txt file as your website evolves. For example, if you introduce new sections or features, make sure to adjust your directives accordingly. Keeping your SEO strategies in line with your robots.txt configurations can significantly enhance your site’s visibility and performance.

Your WordPress robots.txt file is more than just a simple text document; it’s a powerful tool that can shape the way search engines interact with your website. By carefully considering what to include, you can enhance your site’s crawlability, keep crawlers out of areas they don’t need to visit, and ultimately improve your SEO strategy. As we’ve explored, striking the right balance between accessibility and privacy is key to optimizing your online presence.

As you continue to navigate the ever-evolving landscape of digital content, remember that your robots.txt file is not set in stone. Regularly review and update it in accordance with changes in your site or search engine algorithms. With a thoughtful approach to managing your directives, you’ll empower both search engines and users to engage more effectively with your WordPress site. Now, go ahead and take command of your digital space. Your robots.txt is waiting to be crafted!