In an era where technology seamlessly intertwines with daily life, the emergence of artificial intelligence has ushered in transformative innovations across various sectors. Among these advancements, Meta’s AI bots have garnered significant attention, especially for their potential to engage with younger audiences. However, beneath the surface of these compelling advancements lies a pressing concern: the safety of children interacting with AI in virtual environments. As lawmakers grapple with the implications of these sophisticated tools, the challenge remains: how can we harness the benefits of AI while safeguarding our most vulnerable users? This article delves into the complexities of AI technology, highlighting the risks it poses to children and exploring the critical role Congress can play in ensuring a safer digital landscape for the next generation.

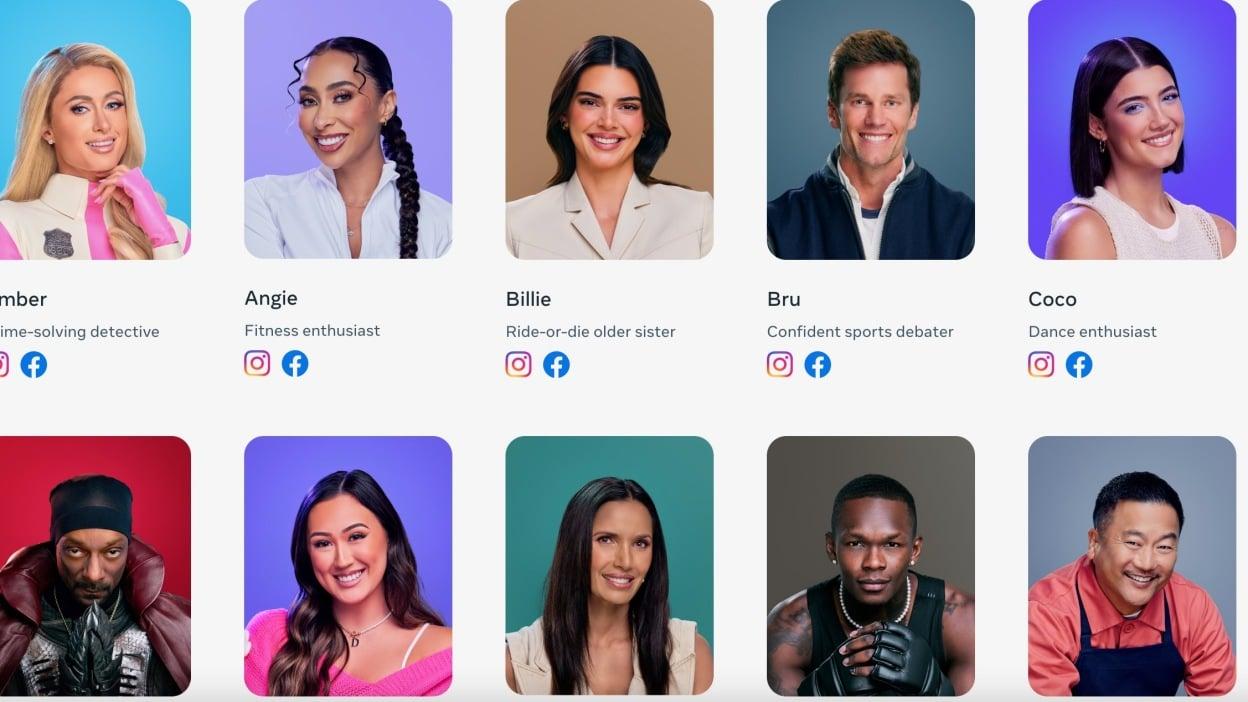

As Meta continues to launch advanced AI bots aimed at enhancing user interaction, there is growing concern regarding their implications for young users. These bots, designed to engage and entertain, can inadvertently expose children to a range of risks, including inappropriate content, privacy breaches, and manipulative advertising tactics. In an age where digital literacy is often lacking among young people, the gap between their ability to critically assess information and the sophistication of these bots poses a significant challenge for parents and guardians.

Some potential dangers include:

To address these threats, regulatory measures must be implemented to establish guidelines around the use of AI technology in children’s platforms. Congressional oversight could ensure that companies prioritize user safety, introducing standards that protect vulnerable demographics while fostering a secure digital environment.

The incorporation of AI bots into platforms frequented by children presents a series of intricate challenges that demand serious attention. While these bots are designed to enhance engagement and learning, they can inadvertently expose young users to various risks. Some critical concerns include:

Furthermore, given the rapid evolution of AI technology, comprehension of its limitations remains essential. The following table highlights some of the primary vulnerabilities associated with AI bots’ interactions with children:

| Risk Factor | Description |
|---|---|
| Emotional Impact | Children may develop attachments to AI with emotional consequences. |
| Exposure to Misinformation | AI bots may inadvertently share false information. |
| Social Isolation | Increased reliance on AI for interaction can reduce real-world social skills. |

In an age where technology permeates every aspect of our lives, parents and guardians face the challenging task of understanding the implications of AI interactions for their children’s safety. With Meta’s AI bots potentially exposing young users to inappropriate content or harmful behaviors, it is crucial for caregivers to be proactive in educating themselves about these digital companions. Establishing open lines of communication with children helps to foster an understanding of safe online practices. Key strategies include:

Navigating the landscape of AI technology can be daunting, yet knowledge is a powerful tool for guardians. Collaboration with local advocacy groups and participation in community workshops can enhance understanding of AI’s potential risks and benefits. Consider the following aspects when discussing AI interaction with children:

| Aspect | Consideration |
|---|---|
| Privacy Settings | Review and adjust according to age-appropriate use. |
| Content Exposure | Be aware of what topics or information are being presented to children. |
| Peer Interaction | Discuss the impact of peer influence in online environments. |

By equipping themselves with knowledge and fostering an environment of trust and open discussion, parents and guardians can play an active role in ensuring that their children engage with AI in safe, constructive ways. As technology continues to evolve, so must our approaches to safeguarding the next generation.

As the digital landscape continues to evolve, it becomes increasingly essential for lawmakers to proactively establish sound policies that prioritize child safety. To address the concerns posed by AI bots, particularly those employed by major tech corporations like Meta, Congress must implement robust regulatory frameworks. Potential legislative measures might include:

By adopting a multifaceted approach that encompasses these initiatives, Congress can cultivate a safer digital ecosystem for children. One effective way to strengthen policy impact is collaboration with tech companies, parents, educators, and child advocacy groups. Through public forums and consultations, lawmakers can gather vital insights to refine legislation. It’s also critical to monitor technological evolution continuously, ensuring that policies adapt to emerging threats posed by advanced AI systems.

| Legislative Action | Expected Outcome |
|---|---|
| Mandatory Age Verification | Reduced access for underage users |
| Data Privacy Regulations | Enhanced protection of children’s data |
| Transparent Reporting Requirements | Greater accountability for tech companies |
| Implementing AI Ethics Standards | Safer AI interactions with minors |

In an era where technology continuously shapes the landscapes of our lives, the emergence of AI bots like those developed by Meta introduces both remarkable opportunities and undeniable challenges. While these bots promise innovative ways to engage and educate, they also cast a shadow of concern, particularly regarding the safety of our children in digital spaces. As we navigate this uncharted territory, it becomes imperative that policymakers remain vigilant and proactive. By enacting robust regulations and promoting transparent practices, Congress holds the power to ensure that the digital playground remains a safe environment for our youngest users. Balancing innovation with protection is not just a choice; it is a responsibility we collectively share. As we look to the future, the call for thoughtful discourse and decisive action has never been more crucial. Together, we can forge a path where technology serves to uplift, educate, and protect the next generation.