In a rapidly evolving digital landscape, the power of social media platforms has never been more pronounced—or more scrutinized. As Meta, the parent company of Facebook and Instagram, grapples with increasing scrutiny over its content moderation practices, a series of lawsuits in Ghana has emerged that could set significant precedents. These legal challenges focus on the psychological and emotional toll that exposure to extreme content may have on the moderators tasked with policing the platforms. This article delves into the heart of the matter, exploring the implications of these lawsuits not just for Meta, but for the broader conversation around digital responsibility and the mental health of those working behind the screens. As we navigate this complex terrain, questions arise: How far does corporate responsibility extend? And what does it mean to safeguard the well-being of those who manage the vast oceans of information flowing through our online lives?

As Meta finds itself embroiled in multiple lawsuits in Ghana, the focus has shifted to the company’s controversial content moderation practices. The lawsuits allege that sustained exposure to extreme and often distressing content has taken a toll on the mental health of moderators tasked with sifting through millions of posts daily. Through these legal challenges, a spotlight has been thrown on the ethics of requiring people to endure graphic material as part of their job. Former moderators have come forward, sharing harrowing accounts of burnout, trauma, and the psychological impact of working in environments where they regularly face violent and unsettling content.

The ramifications of these allegations extend beyond individual experiences to broader questions about corporate responsibility. Critics argue that Meta has not adequately addressed the need for comprehensive support systems for moderators who deal with the fallout of extreme content. Key concerns highlighted in the lawsuits include:

The surge of extreme content on Meta's platforms has had profound consequences, particularly for the individuals tasked with moderating it. These moderators often face a daily onslaught of graphic violence, hate speech, and distressing imagery, which can contribute to a variety of mental health issues. The environment is often described as a “digital frontline,” where emotional fatigue, anxiety, and post-traumatic stress become commonplace. As the content grows more extreme, the psychological burden on these workers intensifies, highlighting the need for more robust support systems within the industry.

Moreover, studies indicate that the toll isn’t merely professional; it spills over into personal lives, affecting relationships and overall well-being. The following aspects illustrate the multifaceted impact of moderating extreme content:

A critical assessment of this issue reveals the urgency for better mental health resources, including therapy services and regular psychological check-ins for moderators. As this situation unfolds in courtrooms, further attention must be paid to the support structures required to safeguard the mental health of those who are, arguably, the unsung heroes of digital safety.

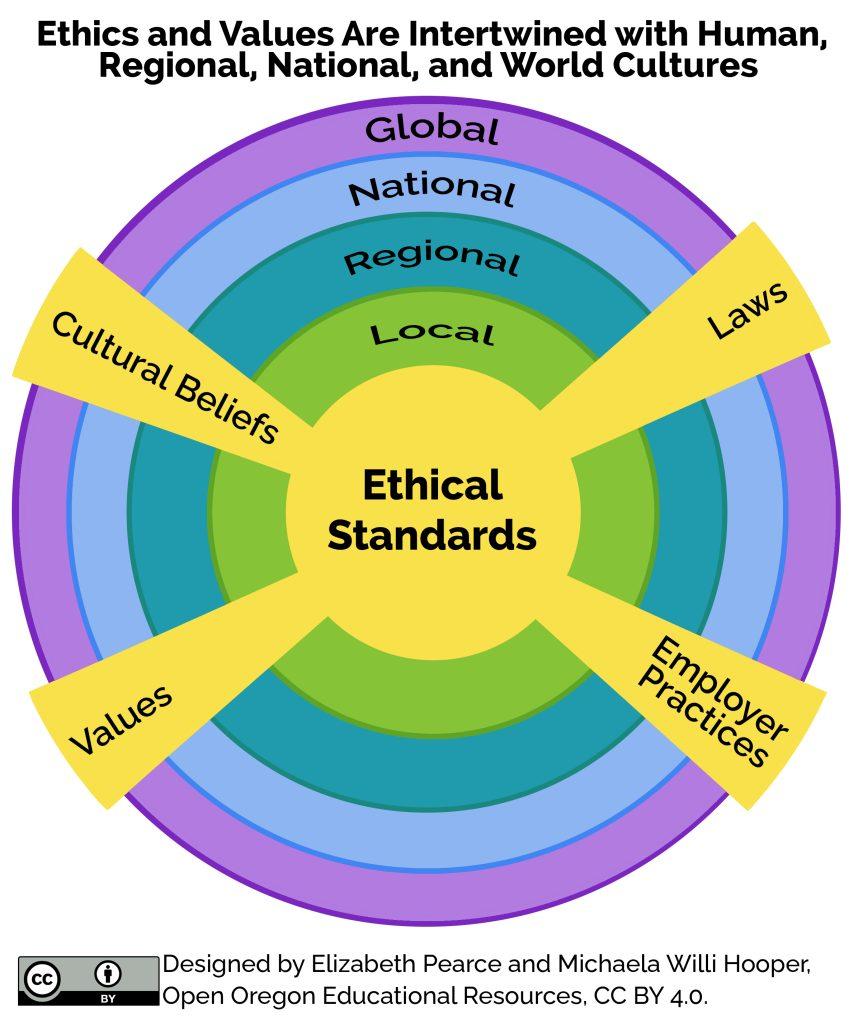

As discussions surrounding the lawsuits against Meta gain traction in Ghana, it becomes imperative to address the pressing need for ethical standards in content moderation. Key stakeholders, including technology companies, government entities, and civil society, must collaborate to create a framework that prioritizes the mental well-being of moderators while ensuring the integrity of the platforms. To achieve this, we recommend:

Moreover, developing a clear policy that defines ethical standards within content moderation can help protect not only the moderators but also the communities they serve. Regular audits and evaluations of moderation practices could foster accountability. Organizations could consider:

| Evaluation Aspect | Proposed Action |
|---|---|
| Effectiveness of Content Removal | Regularly review and analyze the effects of moderation on user experience. |
| Moderators’ Workload | Ensure manageable workloads to prevent burnout and maintain morale. |
| Community Input | Incorporate user feedback on moderation practices to enhance transparency. |

The recent lawsuits against Meta in Ghana highlight the pressing need for companies to adopt a more accountable and transparent approach to managing the consequences of extreme content on their platforms. Stakeholders are increasingly scrutinizing how social media giants handle moderation, particularly in vulnerable regions where users may be disproportionately affected. Robust governance measures are needed to ensure that the moderation process is not only fair but also reflective of the diverse cultural contexts of its users. This scrutiny calls for a shift in operational protocols that puts user safety and the mental health of moderators first.

To truly navigate the complexities of accountability, social media platforms must consider several key aspects:

In light of these factors, a collaborative approach with local governments, advocacy groups, and users themselves is vital. The table below illustrates potential initiatives for improved moderation and transparency:

| Initiative | Description | Expected Outcome |
|---|---|---|
| Community Workshops | Engagement sessions with users to discuss moderation practices. | Increased awareness and understanding of moderation policies. |
| Feedback Loops | Regular surveys to gauge user experiences with content moderation. | Improved moderation strategies based on user feedback. |
| Transparency Reports | Quarterly disclosures on moderation metrics and practices. | Enhanced trust and accountability from users. |

As the legal battles unfold in Ghana, the spotlight shines not only on Meta but also on the broader implications for content moderation across the globe. The concerns raised highlight a pivotal intersection of technology, ethics, and accountability in an increasingly digital world. As these lawsuits progress, they remind us of the urgent need for balance between innovation and the well-being of those who navigate the complexities of online content. Whether this legal scrutiny will lead to meaningful change remains to be seen, but it undeniably ushers in an important dialogue about the responsibilities of tech giants in protecting their workforce and those affected by their platforms. In the coming days, the tech industry will undoubtedly be watching closely, not just to see the outcome of these cases but to glean insights that could shape the future of content moderation everywhere.