In an era where digital dialogue often dances on the fine line between innovation and controversy, the unveiling of leaked guidelines from Meta AI has sparked both intrigue and debate. These documents, which illuminate the training methodologies behind one of the most talked-about chatbots in the tech landscape, offer a rare glimpse into the algorithms designed to discern sensitive content and navigate the complex terrain of societal norms. As artificial intelligence continues to evolve, understanding the mechanisms that guide its interactions becomes essential, not only for developers but also for users who engage with these digital entities. In this article, we delve into these guidelines, exploring how Meta AI approaches the delicate task of conversation while aiming to mitigate potential misunderstandings and uphold community standards. Join us as we unpack the intricacies of this technology and the implications of its boundaries in an increasingly interconnected world.

Meta AI employs a refined framework designed to meticulously classify sensitive content, ensuring that conversations remain respectful and within community guidelines. The guidelines reveal a layered approach, including an extensive database of terms and phrases categorized by sensitivity. The three primary categories include:

Through the integration of machine learning algorithms and human oversight, the AI is trained to flag, review, and possibly remove content that crosses these boundaries. A pivotal part of this classification process involves reviewing context and intent, not just keywords. The AI utilizes a scoring system to assess the potential impact of the identified content, which is outlined in the following table:

| Impact Level | Description |
|---|---|
| Low | Content that may be sensitive but does not pose a significant risk of harm. |
| Medium | Content that is potentially harmful and may incite discussion or conflict. |
| High | Content that is likely to lead to real-world consequences or severe distress. |
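The scoring step described above can be illustrated with a minimal sketch. It assumes the system produces a numeric risk score between 0.0 and 1.0 and maps it onto the three impact levels in the table. The thresholds and the `impact_level` function are hypothetical and chosen purely for illustration; the leaked guidelines, as summarized here, do not state actual cut-off values.

```python
from enum import Enum


class ImpactLevel(Enum):
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"


# Hypothetical cut-offs on a 0.0-1.0 risk score; not taken from the guidelines.
MEDIUM_THRESHOLD = 0.4
HIGH_THRESHOLD = 0.75


def impact_level(risk_score: float) -> ImpactLevel:
    """Map a risk score in [0.0, 1.0] to one of the three impact levels."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0.0 and 1.0")
    if risk_score >= HIGH_THRESHOLD:
        return ImpactLevel.HIGH
    if risk_score >= MEDIUM_THRESHOLD:
        return ImpactLevel.MEDIUM
    return ImpactLevel.LOW


if __name__ == "__main__":
    for score in (0.1, 0.5, 0.9):
        print(score, impact_level(score).value)
```

In practice the score itself would come from a trained classifier combined with human review; the sketch only covers the final bucketing step.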

The meticulous training process of Meta’s chatbot is underpinned by innovative techniques designed to navigate complex dialogues while deftly avoiding controversy. A fundamental aspect of this training involves the systematic categorization of sensitive content, which encompasses topics that could elicit strong emotional reactions or ethical dilemmas. By leveraging vast datasets that include flagged interactions and nuanced discussions, the chatbot learns to identify content within the following categories:

To enhance its decision-making capabilities, the chatbot undergoes continuous reinforcement training. This involves iterative learning where feedback from user interactions informs its responses, allowing it to gradually refine its approach. A pivotal part of this process is the application of dynamic response generation, enabling the chatbot to adapt its replies based on real-time context and previously encountered scenarios. Below is an illustrative table showcasing the guiding principles that frame the chatbot’s functionality:

| Guiding Principle | Description |
|---|---|
| Contextual Awareness | Understanding the nuances of conversation flow. |
| Empathy-Driven Responses | Creating emotionally intelligent interactions. |
| Automated Flagging | Automatically recognizing and addressing potential pitfalls. |

In the ever-evolving landscape of AI, maintaining a balance between sensitivity and user engagement is crucial. Chatbots, like those developed by Meta, are meticulously trained to navigate the complexities of human conversation, ensuring that interactions are both informative and empathetic. The guidelines that have recently surfaced reveal a multi-faceted approach where sensitivity to various topics is prioritized. By employing techniques such as keyword recognition and context analysis, these AI systems can effectively identify and respond to sensitive content, minimizing the risk of offending users. This capability not only safeguards the user experience but also promotes a sense of trust and safety in digital communication.
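As a rough illustration of how keyword recognition and context analysis might work together, the sketch below flags a message only when a sensitive term appears without a benign context cue. The term lists and the `needs_review` function are hypothetical, invented for this example; a production system would most likely rely on learned classifiers rather than fixed word lists.

```python
import re

# Hypothetical term lists, for illustration only.
SENSITIVE_TERMS = {"attack", "kill", "overdose"}
BENIGN_CONTEXT_TERMS = {"game", "movie", "novel", "chess", "history"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def needs_review(message: str) -> bool:
    """Flag a message when a sensitive term appears without benign context."""
    tokens = set(tokenize(message))
    has_sensitive_term = bool(tokens & SENSITIVE_TERMS)
    has_benign_context = bool(tokens & BENIGN_CONTEXT_TERMS)
    return has_sensitive_term and not has_benign_context


if __name__ == "__main__":
    print(needs_review("How do I attack someone?"))
    print(needs_review("What is the best attack in chess?"))
```

The point of the second check is that the same keyword can be harmless in one conversation and concerning in another, which is why keyword matching alone tends to over-flag.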

Moreover, successful engagement isn’t solely about avoiding controversy; it entails fostering meaningful dialogues that respect diverse perspectives. Therefore, AI responses are crafted with an understanding of nuances in tone and language. Key strategies include:

An effective response strategy is encapsulated in the following table, illustrating how sensitivity and engagement can go hand in hand:

| Goal | Approach |
|---|---|
| Require sensitivity to potential triggers | Implement context-aware filtering |
| Enhance user engagement | Incorporate relatable examples and stories |
| Minimize the risk of offense | Adopt conversational norms of respect |

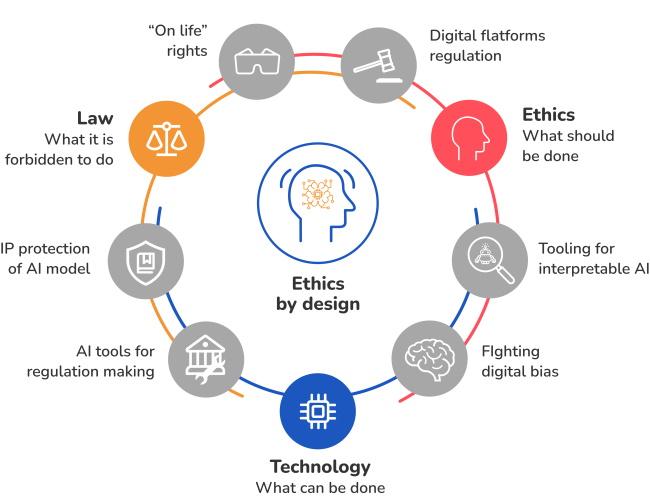

To foster responsible AI development and mitigate risks associated with sensitive content, several best practices can be distilled from Meta’s guidelines. Key principles focus on ensuring transparency, accountability, and sensitivity in AI design and deployment. Developers are encouraged to maintain open lines of communication with stakeholders, allowing for constructive feedback and enabling the identification of potentially harmful biases within AI systems. This participatory approach builds trust and encourages the ethical use of technology.

Moreover, implementing continuous learning mechanisms is vital for refining the effectiveness of AI tools. Regular audits and assessments should be conducted to evaluate AI behavior in real-world scenarios. Below are some recommended strategies:

| Principle | Action |
|---|---|
| Transparency | Clearly disclose AI capabilities and limitations. |
| Accountability | Establish processes for addressing AI failures. |
| Sensitivity | Prioritize user safety by minimizing exposure to harmful content. |
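One way to make the regular audits mentioned above concrete is to compare automated flags against human review on a sample of conversations. The sketch below assumes each sample is a pair of booleans (whether the system flagged it, whether a human reviewer judged it harmful); the `audit_flags` function and the data layout are hypothetical and not drawn from Meta’s guidelines.

```python
def audit_flags(samples: list[tuple[bool, bool]]) -> dict[str, float]:
    """Compute precision and recall of automated flags against human labels.

    Each sample is (auto_flagged, human_says_harmful).
    """
    true_pos = sum(1 for auto, human in samples if auto and human)
    false_pos = sum(1 for auto, human in samples if auto and not human)
    false_neg = sum(1 for auto, human in samples if not auto and human)

    # Guard against division by zero when nothing was flagged or nothing was harmful.
    flagged = true_pos + false_pos
    harmful = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / harmful if harmful else 0.0
    return {"precision": precision, "recall": recall}


if __name__ == "__main__":
    sample = [(True, True), (True, False), (False, True), (False, False)]
    print(audit_flags(sample))
```

Low precision suggests the system is over-flagging harmless content, while low recall suggests harmful content is slipping through; tracking both over time gives an audit something measurable to report.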

In a world where the digital landscape is ever-evolving, the recent leak of Meta’s AI guidelines sheds light on the delicate balance between innovation and responsibility. As chatbots become integral to our daily interactions, understanding the methodologies behind their training provides valuable insight into how they navigate sensitive topics and steer clear of controversy. While these guidelines raise questions about transparency and the ethical implications of AI, they also highlight the ongoing efforts to create a more thoughtful and considerate technological environment. Moving forward, it is essential for both developers and users to remain vigilant, ensuring that the tools designed to enhance our communication are grounded in principles that respect and understand the diverse tapestry of human experience. As we continue to explore the boundaries of AI technology, let us remain engaged in the conversation, advocating for a future where responsible innovation thrives.