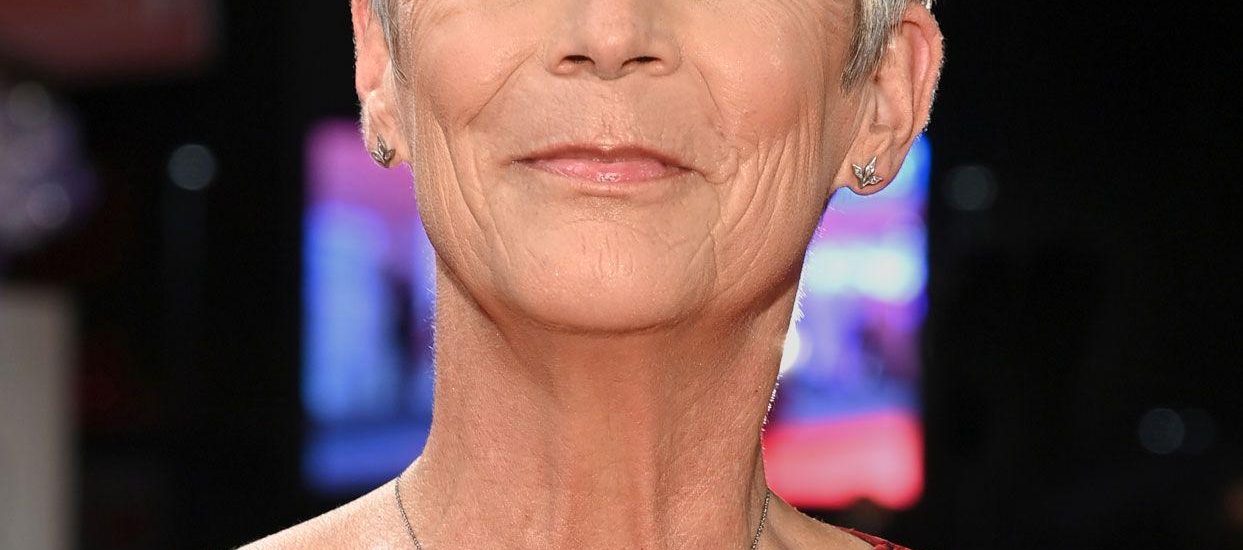

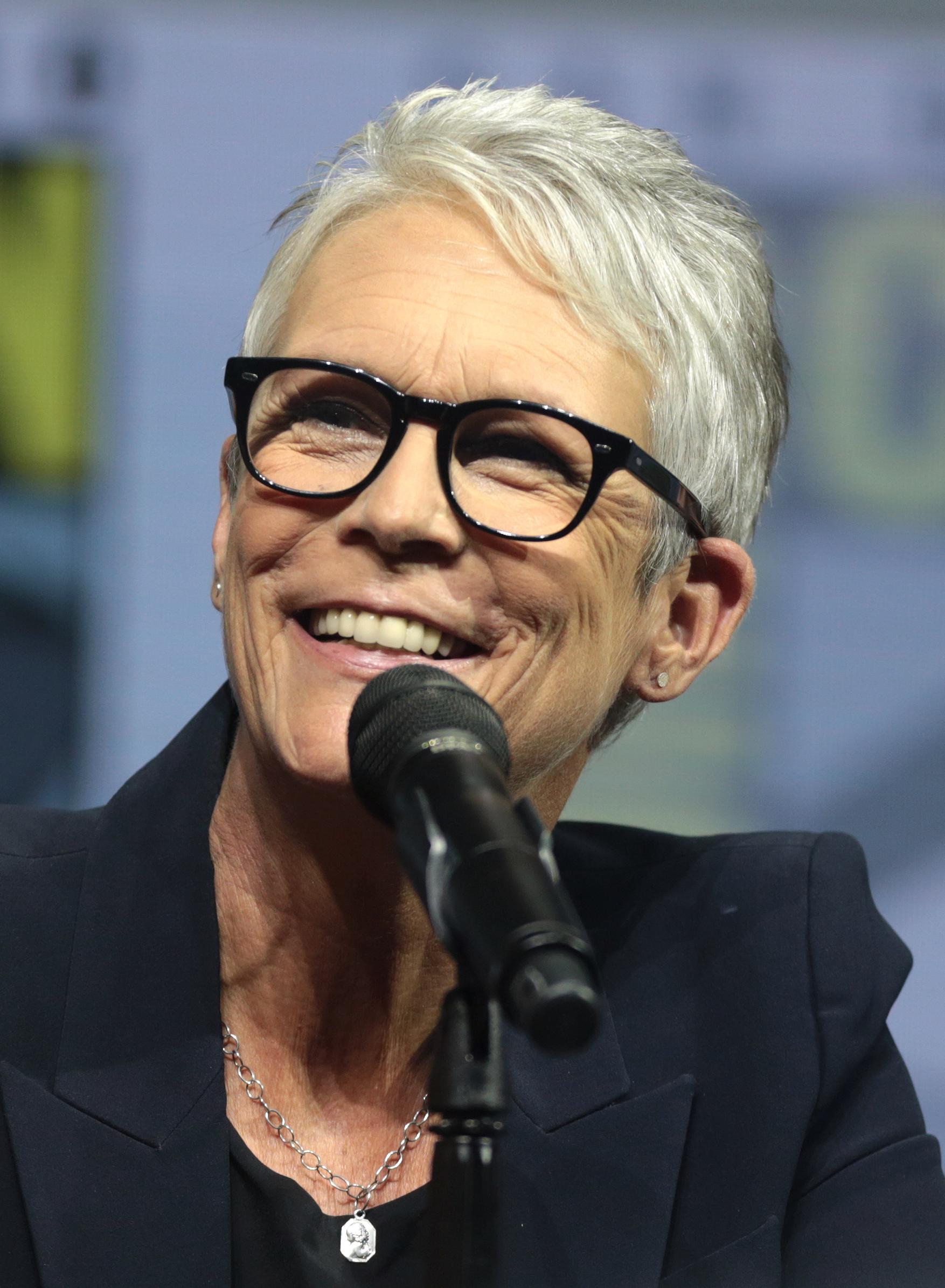

In the ever-evolving landscape of technology and digital expression, the lines between reality and illusion are becoming increasingly blurred. At the forefront of this discussion is actor and advocate Jamie Lee Curtis, who has taken a bold step in addressing an unsettling trend: the proliferation of AI-generated deepfake videos. She recently called out Mark Zuckerberg and his company, Meta, over their apparent indifference to the misuse of advanced artificial intelligence to create deceptive content. As the intersection of celebrity, technology, and ethics sparks heated debate, Curtis’s remarks challenge not only corporate responsibility but also our collective understanding of authenticity in an age dominated by pixels and algorithms. This article examines the implications of her statement, the impact of deepfake technology, and the urgent need for accountability in the digital realm.

Curtis took to social media to voice serious concerns about the spread of AI deepfake videos, particularly those that can damage reputations and erode trust in the digital age. She directed her criticism at Meta and its CEO, Mark Zuckerberg, for their apparent inaction in combating the misuse of artificial intelligence. Curtis emphasized that the rise in manipulated media can have serious consequences, undermining authenticity and creating a hazardous environment for private individuals and public figures alike. In her passionate defense of digital integrity, she laid out several issues that she believes need immediate attention.

A quick look at recent statistics points to an alarming rise in deepfake videos. As Curtis pointed out, platforms like Meta must take responsibility for the content shared on their networks. The table below provides a snapshot of reported deepfake incidents in 2022, highlighting the urgency of action:

| Type of Incident | Reported Cases (2022) | Impact Level |
|---|---|---|
| Political Manipulation | 250+ | High |
| Celebrity Impersonation | 150+ | Medium |
| Fraudulent Activities | 100+ | Critical |

The ramifications of ignoring the rising tide of deepfake content are profound, extending well beyond entertainment into questions of social responsibility and ethics. When people use artificial intelligence to manipulate images and video, they do not merely produce entertainment; they also sow distrust and misinformation. The consequences include a devaluation of truth in media, reputational damage to individuals caught in the crossfire, and an environment where anyone can become a target of digital harassment or defamation. Without decisive action from companies like Meta, the spread of these deceptive technologies could undermine the very fabric of societal trust.

Addressing deepfake videos demands a multi-faceted approach with ethical considerations at its core. Stakeholders in the tech industry must prioritize clear guidelines and protocols for combating this challenge.

To illustrate the potential dangers of ignoring this phenomenon, consider the following:

| Aspect | Potential Impact |
|---|---|
| Public Trust | Decline in confidence in media sources |
| Legal Repercussions | Increased lawsuits against platforms for harm caused by deepfakes |
| Social Divide | Heightened polarization due to manipulated narratives |

In light of the ongoing challenges regarding deepfake technology and its implications for media integrity, several innovative solutions have emerged to enhance accountability and transparency within digital platforms. One of the most promising approaches involves the development of robust verification systems that can authenticate the origin of videos and images. By implementing advanced AI algorithms, these systems can detect alterations made to media files, thereby enabling users to identify potential misinformation before sharing. Additionally, fostering collaboration between technology companies, regulatory bodies, and media organizations is essential to create a unified standard for content verification.

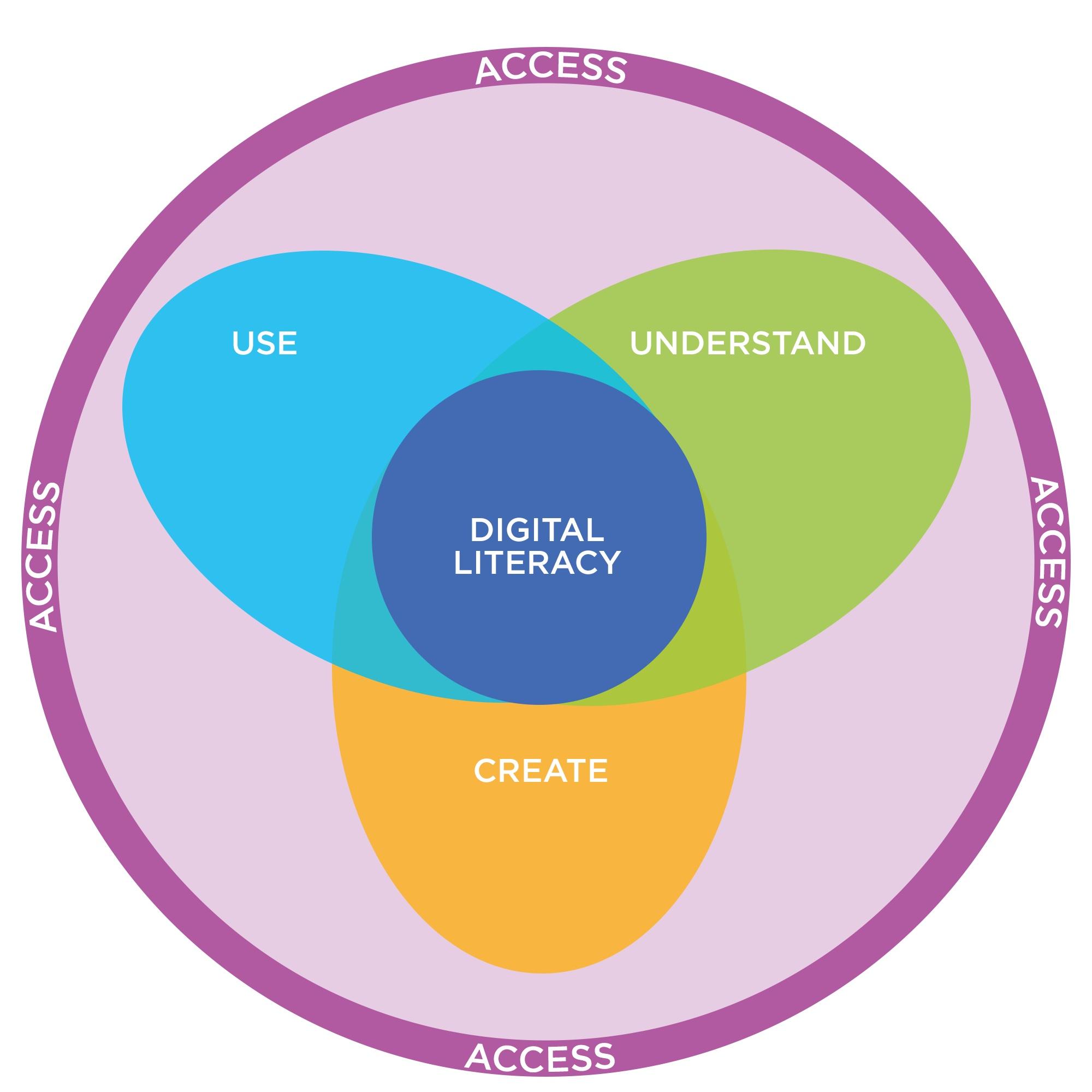

Furthermore, improving user awareness and digital literacy is crucial. Users who know how to critically assess the authenticity of content can considerably slow the spread of harmful deepfakes.

Ultimately, a multi-faceted approach that combines technology, education, and community engagement will be instrumental in paving the way for a more accountable media landscape.

As the digital landscape evolves, the responsibility of tech giants like Meta grows, especially when it comes to protecting users from malicious content. Jamie Lee Curtis’s recent outcry highlights several pressing concerns about how effective existing policies are against AI-generated deepfake videos. With technology advancing rapidly, the capacity for misinformation grows, prompting a vital discussion about how well these platforms are equipped to confront such challenges. Failing to promptly address flagged content, especially content that can cause significant harm, signals a broader problem with how platforms uphold ethical standards in content moderation.

To truly enhance user safety, large tech companies must adopt a multifaceted approach, including but not limited to the measures below:

| Challenge | Proposed Solution |
|---|---|
| Inadequate monitoring of AI misuse | Invest in dedicated AI research teams |
| Slow response to flagged content | Increase moderation resources and staffing |
| Lack of user education on deepfakes | Launch awareness campaigns and educational programs |

In the ever-evolving landscape of technology, where the lines between reality and fabrication blur, Jamie Lee Curtis’s bold stand against Mark Zuckerberg and Meta serves as a timely reminder of the responsibilities that accompany innovation. The emergence of AI deepfake technology has sparked a profound dialogue about ethics, accountability, and the need for proactive measures to safeguard digital integrity. As discussions continue to unfold, Curtis’s call to action underscores the importance of holding tech giants accountable for the consequences of their platforms, urging us all to reflect on the intersection of creativity and responsibility in the digital age. As we navigate this complex terrain, one thing is clear: the conversation is just beginning, and the stakes have never been higher.