In an increasingly digital world, social media platforms have become the new public squares, bustling with a cacophony of voices and views. Yet, hidden behind the vibrant façade of connectivity lies a darker reality that few see. For those tasked with moderating this vast expanse of content, the burden can be overwhelming. In a striking account, one former Meta moderator sheds light on the harrowing psychological toll of sifting through graphic images and videos, including beheadings and child abuse. This article delves into the personal experiences of a moderator who faced an unrelenting barrage of distressing material, ultimately leading to a profound breakdown. As we traverse this unsettling territory, we explore the human cost of content moderation and the urgent need for dialogue on its implications for mental health in the digital age.

The stories of content moderators often remain hidden behind the glossy surface of social media platforms, yet they reveal a stark reality that many would prefer to ignore. Moderators are the unseen gatekeepers, tasked with the relentless job of filtering out graphic material, including violence and explicit abuse. Their responsibilities can lead to severe psychological ramifications, as illustrated by one Meta moderator who recounted the anguish of witnessing horrific images day in and day out. The brutal nature of their work can disrupt basic human needs and lead to debilitating conditions, such as anxiety and insomnia. It’s not uncommon for these individuals to experience an overwhelming sense of helplessness and depression, resulting in a life that’s drastically altered by their roles.

Consider the daily struggles these moderators face:

| Mental Health Effects | Possible Symptoms |
|---|---|
| Post-Traumatic Stress | Flashbacks, anxiety |
| Depression | Loss of interest, fatigue |
| Burnout | Emotional exhaustion, detachment |

This pressing issue raises a vital conversation about the ethics of content moderation and the support systems needed for those who perform this essential but harrowing work. The balance between safeguarding users and protecting the mental well-being of moderators is precarious and calls for immediate attention and innovative solutions.

The psychological toll of moderating graphic material can be devastating, often leading to severe emotional distress and mental health challenges. Individuals in these roles frequently report feelings of isolation, anxiety, and overwhelming sadness due to constant exposure to disturbing images and videos. Common experiences include:

These mental health challenges can ripple through various aspects of life, impacting relationships and work. The emotional burden can lead to physical symptoms, such as chronic fatigue or gastrointestinal issues, as the mind struggles to cope with unprocessed trauma. Many moderators eventually seek psychological support, underscoring the importance of implementing preventive mental health strategies within organizations. A supportive environment may include:

In high-stress environments, especially for moderators dealing with graphic content, the importance of resilience can’t be overstated. A few key strategies can help individuals maintain their mental fortitude amidst the chaos. First, creating a personal support network allows moderators to share their experiences without fear of judgment, which can be vital for emotional relief. Additionally, *establishing boundaries* around work hours ensures that one has time to decompress and engage in self-care activities. Moderators should also consider adopting mindfulness practices such as meditation or deep-breathing exercises to manage stress effectively.

Implementing a regular schedule for breaks can also be beneficial, encouraging a rhythm that balances periods of intense focus with moments of rest. Here are several strategies that can promote resilience:

By prioritizing these resilience-building practices, moderators can better navigate the psychological toll of their work, ensuring they have the strength to face the challenges of their role.

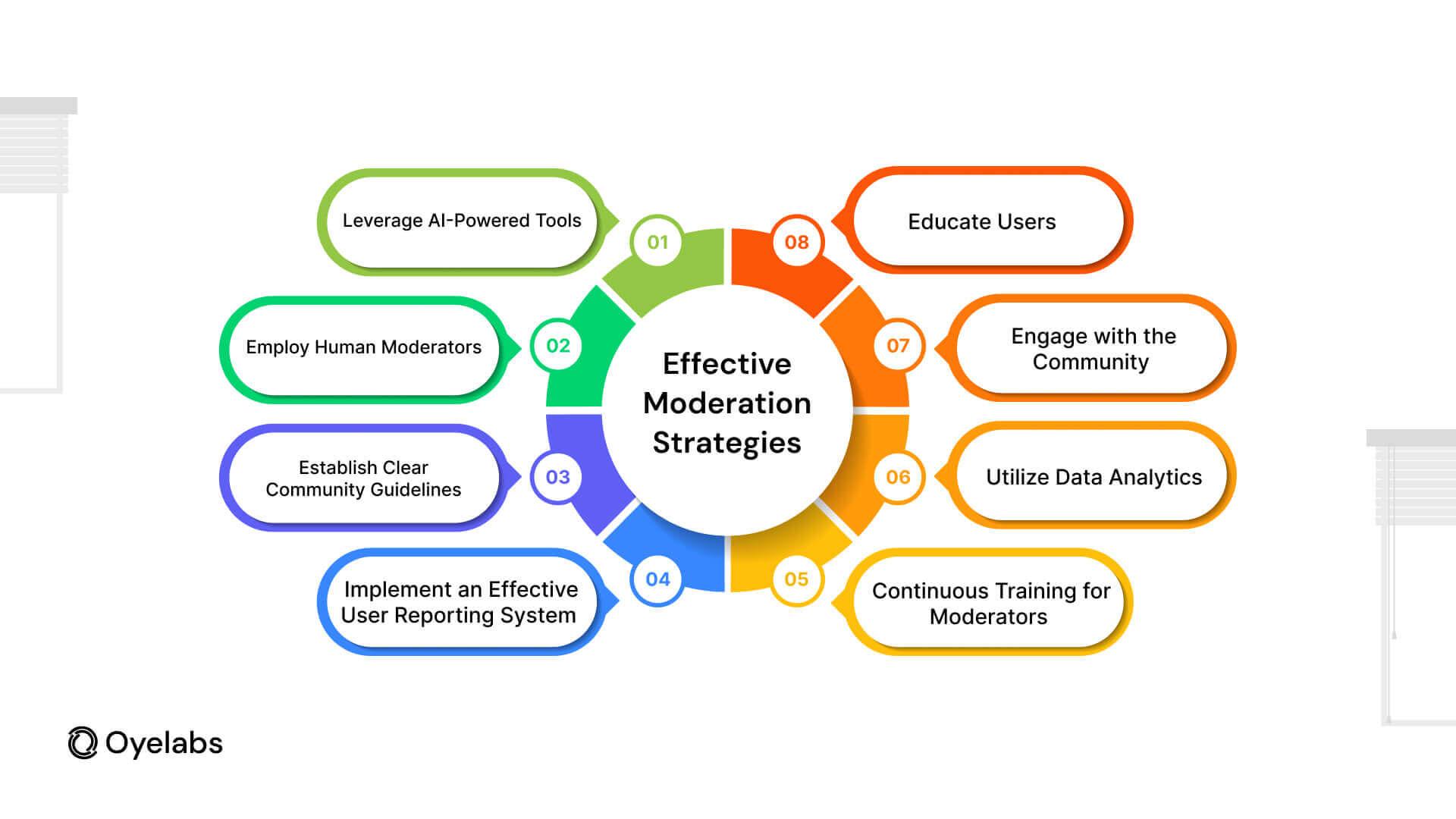

The alarming experiences of moderators exposed to extreme content underscore the urgent need for tech companies to prioritize mental health support for their employees. These individuals often encounter graphic material that can lead to severe psychological impacts, including anxiety, depression, and symptoms resembling PTSD. Tech companies should consider implementing the following initiatives to create a more nurturing work environment:

In addition to supportive practices, tech companies must actively foster a culture that recognizes the emotional toll of moderation. Educational training sessions can raise awareness about the challenges moderators face and build empathy across teams. Additionally, companies can offer thorough debriefing opportunities post-shift, which provide a safe space for moderators to express their feelings and decompress. Consider the following strategies:

| Strategy | Description |
|---|---|
| Education Initiatives | Host workshops focused on trauma awareness and emotional resilience. |
| Open Dialogue | Create forums where moderators can converse openly about their experiences without stigma. |
| Resource Accessibility | Make mental health resources easily available, including helplines and therapy access. |

As we reflect on the harrowing experiences shared by our featured Meta moderator, it becomes clear that the toll of digital oversight extends far beyond the click of a mouse. The shadows cast by the grotesque content they encounter daily illuminate the urgent need for a compassionate approach to online moderation and mental health support. In a world increasingly defined by its virtual landscapes, acknowledging the human cost of policing such spaces is essential. As we navigate the complexities of technology, let us not forget the stewards of our digital realms, the individuals who bear witness to humanity’s darkest corners. It is in understanding their stories that we may foster a safer, more humane online environment for all.