In a rapidly evolving digital landscape where artificial intelligence plays an increasingly pivotal role, Meta has unveiled its latest brainchild: Llama 4. This new model family promises to push the boundaries of machine learning with expanded capabilities and refined features. With larger neural architectures, stronger visual comprehension, and native support for more than one input modality, Llama 4 sits at the forefront of AI development. As we dig into the details of this system, we’ll explore how it advances Meta’s ambitious vision for the future of technology and where it raises the bar for the field. Join us as we unpack the advancements that make Llama 4 a major step forward in the quest for smarter, more interconnected machines.

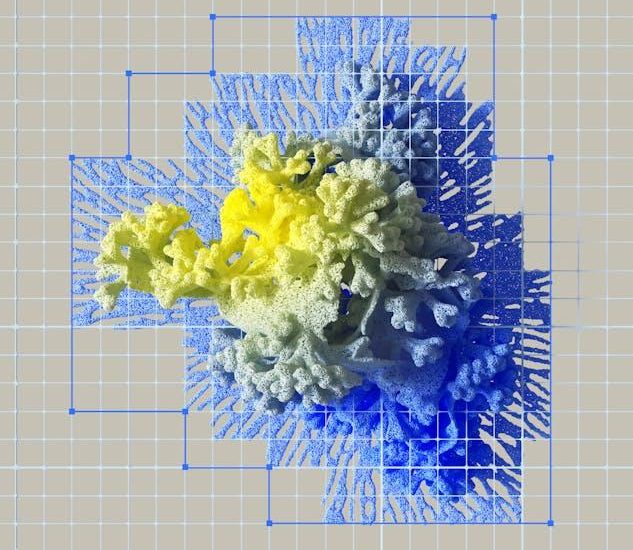

The latest iteration of Meta’s Llama series marks an important leap forward in artificial intelligence, expanding its capabilities beyond conventional bounds. With a larger neural architecture, Llama 4 has more capacity to understand and generate human language accurately and fluently. This evolution isn’t just about size, though; it is fundamentally about how AI interacts with the world. Native multimodality lets the model take images as well as text as input and reason over both within a single prompt.

The technological advancements in Llama 4 show up not just in its feature list but also in practical applications across diverse sectors, from healthcare to finance, where it is positioned as a foundation for business applications. The following table compares key capabilities with those of its predecessor:

| Capability | Llama 3 | Llama 4 |
|---|---|---|
| Language Comprehension | High | Very High |
| Multimodal Integration | Vision only in Llama 3.2 (separate 11B and 90B models) | Native text and image input |
| Response Creativity | Moderate | High |

The neural architecture of Llama 4 represents a significant evolution in multimodal processing and deep learning. At its core is a transformer that, for the first time in the Llama family, uses a mixture-of-experts (MoE) design: instead of running every parameter for every token, the model routes each token through only a small subset of specialized "expert" sub-networks. This sparsity lets it handle large amounts of contextual data efficiently.
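The sparse computation in Llama 4 comes from its mixture-of-experts layers. Meta has described each token as being sent to a shared expert plus one routed expert, and the toy sketch below illustrates that general idea in plain Python. It is a minimal sketch under stated assumptions, not Meta’s implementation: the softmax gate, the function names, and the stand-in "experts" (which merely scale a vector) are all hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax over a list of router logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def moe_layer(token: Vector,
              router_weights: List[Vector],
              routed_experts: List[Expert],
              shared_expert: Expert) -> Tuple[Vector, int]:
    """Toy MoE layer: a shared expert plus the single best routed expert (top-1)."""
    # One router logit per routed expert; softmax gating is an assumption here.
    probs = softmax([dot(w, token) for w in router_weights])
    # Top-1 routing: only the highest-scoring routed expert is evaluated.
    chosen = max(range(len(probs)), key=lambda i: probs[i])
    routed_out = routed_experts[chosen](token)
    shared_out = shared_expert(token)  # the shared expert sees every token
    # Output = shared expert output + gate-weighted routed expert output.
    combined = [s + probs[chosen] * r for s, r in zip(shared_out, routed_out)]
    return combined, chosen


def make_scaler(k: float) -> Expert:
    """Hypothetical stand-in 'expert' that just scales its input by k."""
    return lambda v: [k * x for x in v]


# Four hypothetical routed experts, a 2-D router, and a shared expert.
experts = [make_scaler(k) for k in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, idx = moe_layer([2.0, 0.5], router, experts, make_scaler(0.5))
print(f"routed to expert {idx}, output {out}")
```

Only one of the four routed experts does any work for this token, which is the property that lets an MoE model hold far more parameters than it uses on any single forward pass.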

The scalability of Llama 4’s architecture lets it tackle a variety of tasks, from natural language processing to image understanding. A notable consequence of the expert design is efficiency: because only some experts run for each token, the total parameter count can grow much faster than the compute spent per token. The following table highlights some of the architectural advancements:

| Feature | Description |
|---|---|
| Increased Parameters | More parameters in total (up to 400B in Llama 4 Maverick), with only 17B active per token thanks to expert routing. |
| Efficient Layer Stacking | Optimized stacking for faster processing times without loss of depth. |
| Reinforcement Learning Integration | Uses reinforcement learning during post-training to refine the model’s responses. |
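To make the "Increased Parameters" row concrete, the snippet below works through the arithmetic using Meta’s published headline figures for the two released models: Llama 4 Scout (17B active parameters, 16 experts, 109B total) and Llama 4 Maverick (17B active, 128 experts, 400B total). The `MoEModel` class is a hypothetical helper for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    name: str
    active_params_b: float  # parameters used for each token, in billions
    total_params_b: float   # parameters stored in the checkpoint, in billions
    num_experts: int        # expert count as published by Meta

    def active_fraction(self) -> float:
        """Share of the model's weights that take part in one token's forward pass."""
        return self.active_params_b / self.total_params_b


# Published headline figures for the two released Llama 4 models.
MODELS = [
    MoEModel("Llama 4 Scout", 17, 109, 16),
    MoEModel("Llama 4 Maverick", 17, 400, 128),
]

for model in MODELS:
    print(f"{model.name}: {model.num_experts} experts, "
          f"{model.active_fraction():.2%} of parameters active per token")
```

Both models spend roughly the same compute per token, yet Maverick stores about 3.7 times as many weights as Scout; the extra capacity lives in additional experts rather than in a longer forward pass.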

In an era where artificial intelligence keeps redrawing the boundaries of what is possible, Llama 4 stands out for integrating multiple modalities natively. Rather than being limited to textual comprehension, it accepts images alongside text and can interpret the two together, which broadens the ways users can interact with it.

Llama 4 doesn’t just process different types of data side by side. Meta describes its design as early fusion: text and image tokens are fed into a single model backbone, so the model can learn the relationships between them. This makes it easier to combine several data sources in one request for richer outcomes. Here is a brief overview of its multimodal capabilities:

| Modality | Capability |
|---|---|
| Text | Advanced natural language understanding and generation. |
| Image | Object recognition and scene description. |
| Audio | Not a native input for the released Llama 4 models; speech must be converted to text before the model can use it. |
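Early fusion, as Meta describes it, means image and text tokens enter one shared backbone rather than being handled by separate models. The toy sketch below shows only the sequence-building step: cut an image into patches, project each patch into the same embedding space as the text tokens, and splice the results into the text sequence. The patch size, the hand-written projection matrix, and the `image_slot` placeholder position are illustrative assumptions; the real model uses a learned vision encoder and learned embeddings.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def patchify(image: Matrix, patch: int) -> List[Vector]:
    """Split an H x W single-channel image into flattened patch x patch tiles (row-major)."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    tiles = []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            tiles.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return tiles


def project(vec: Vector, weights: Matrix) -> Vector:
    """Linear map from a flattened patch to the embedding size (one row per output dim)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def early_fusion_sequence(text_embeddings: List[Vector], image: Matrix, patch: int,
                          proj: Matrix, image_slot: int) -> List[Vector]:
    """Splice image-patch tokens into the text sequence so one backbone sees both."""
    image_tokens = [project(tile, proj) for tile in patchify(image, patch)]
    return text_embeddings[:image_slot] + image_tokens + text_embeddings[image_slot:]


# Toy inputs: a 4x4 "image", 2x2 patches, and a 3-dimensional embedding space.
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
proj = [[0.25, 0.25, 0.25, 0.25],  # mean of the patch
        [1.0, 0.0, 0.0, 0.0],      # top-left pixel
        [0.0, 0.0, 0.0, 1.0]]      # bottom-right pixel
text = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two hypothetical text-token embeddings

sequence = early_fusion_sequence(text, image, patch=2, proj=proj, image_slot=1)
print(len(sequence))  # 6: two text tokens plus four image-patch tokens
```

Once the image patches live in the same vector space as the words, every downstream layer treats them as ordinary tokens, which is what allows the model to relate parts of an image to parts of a prompt.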

With its enhanced capabilities, Llama 4 opens the door to innovative solutions across many industries. By integrating the model, businesses can streamline operations, boost customer engagement, and make more effective use of their data.

Furthermore, Llama 4’s multimodal capabilities let it analyze text and images together, which can be harnessed for applications such as the following:

| Application Area | Example Use Case |
|---|---|
| Healthcare | Interpreting patient records and medical images to assist clinicians with diagnosis. |
| Education | Interactive learning experiences combining text and visual content tailored to individual learning styles. |
| Entertainment | Creating immersive content that adapts based on audience feedback and preferences. |

As we conclude our exploration of Meta’s Llama 4, it’s clear that advances in artificial intelligence are paving the way for a new era of interconnected, multifaceted communication. With its bigger brains, sharper vision, and multiple modalities, Llama 4 is a testament to the remarkable progress in AI technology. The model not only enhances how we engage with digital platforms but also opens up possibilities for future applications across many fields. As such innovations make their way into daily life, their implications deserve careful thought. What new horizons will open as we continue to harness the capabilities of Llama 4 and its successors? Only time will tell. Until then, the journey into advanced AI continues, promising to transform the way we interact with the world around us.